Is heat in your data center forecast?

Winter may be coming, but it still feels like summer in many data centers.

In some cases, the balmy atmosphere is deliberate, as at Google data centers that run as high as 80 degrees Fahrenheit. More often, though, temperatures rise unintentionally in specific pockets of a facility. While most IT equipment can withstand temperatures of 80 degrees F, which is at the higher end of ASHRAE's recommendations, turning up the heat is not without its risks.

For example, backup-generator batteries should be kept at 65-70 degrees F. Any warmer, and battery life may suffer, possibly causing problems during a long-term power outage that requires use of a secondary power source. Furthermore, should cooling infrastructure fail (possibly following an outage), a facility running at 80 degrees F has less headroom and will reach dangerous temperatures much sooner than one running at 72 degrees F.
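To make the headroom argument concrete, here is a rough sketch that estimates how long a room takes to reach a temperature limit after cooling is lost. It assumes a constant rate of temperature rise, which real facilities do not follow exactly, and the 90-degree limit and 2-degrees-per-minute rate are hypothetical values chosen only for illustration.

```python
def minutes_until_limit(start_f: float, limit_f: float, rise_f_per_min: float) -> float:
    """Minutes until the room reaches limit_f, assuming a constant rate of rise."""
    if rise_f_per_min <= 0:
        raise ValueError("rise_f_per_min must be positive")
    # A room already at or above the limit has no headroom left.
    return max(0.0, (limit_f - start_f) / rise_f_per_min)


# Hypothetical values, not measurements from any real facility.
LIMIT_F = 90.0
RISE_F_PER_MIN = 2.0

for setpoint_f in (72.0, 80.0):
    minutes = minutes_until_limit(setpoint_f, LIMIT_F, RISE_F_PER_MIN)
    print(f"Setpoint {setpoint_f:.0f} F: about {minutes:.0f} minutes of headroom")
```

Under these assumed numbers, the warmer room has roughly half the response time of the cooler one, even though both heat at the same rate.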

That said, optimizing data center temperature is not necessarily about finding a single, universal Goldilocks range. It's more a matter of control: How hot are you capable of running servers without introducing undue risk into your data center? The answer will vary largely based on your existing data center infrastructure.

Temperature monitoring: Back to basics

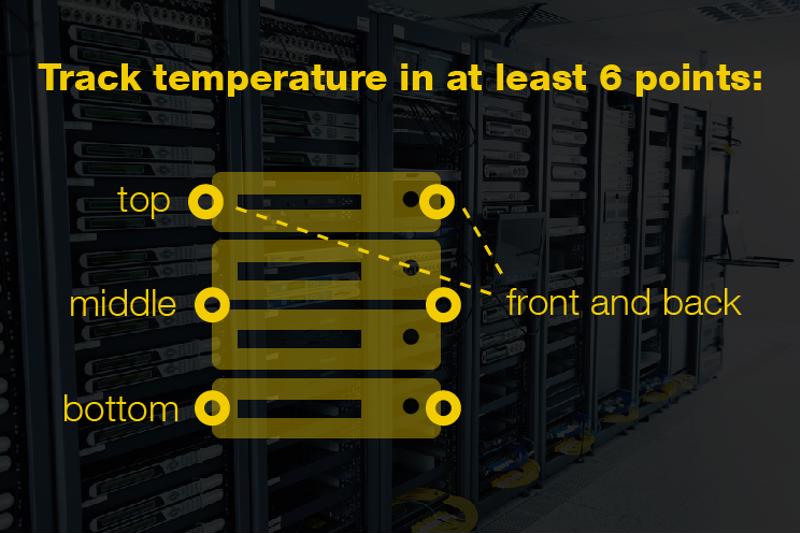

First and foremost, data center managers need accurate assessments of temperature throughout their facilities. To this end, ASHRAE's minimum recommendation is to monitor temperature at three points in each rack: top, bottom and middle.

In practice, though, this means monitoring rack temperature at six points: the top, middle and bottom at both the front and back (intake and exhaust). In front-to-back cooling setups, temperatures are higher at the rear of the rack since the front faces the cool-air source. Likewise, the equipment mounted highest on the rack is farthest from the perforated floor tiles that deliver treated air. Thus, temperature sensors must be positioned at the rear of racks to ensure hot air is properly expelled, and at the front of racks to make sure cool air is actually reaching IT equipment.
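As a minimal sketch of how six-point readings might be checked, the snippet below flags rack positions whose intake temperature exceeds a limit and reports the front-to-back temperature difference at each height. The `RackReadings` type, the function names and the sample readings are all hypothetical; the 80-degree default simply mirrors the upper figure discussed earlier.

```python
from dataclasses import dataclass

POSITIONS = ("top", "middle", "bottom")


@dataclass
class RackReadings:
    """Six readings per rack: intake (front) and exhaust (rear) at three heights."""
    intake_f: dict[str, float]
    exhaust_f: dict[str, float]


def hot_intakes(rack: RackReadings, max_intake_f: float = 80.0) -> list[str]:
    """Positions where the air reaching the equipment is warmer than the limit."""
    return [pos for pos in POSITIONS if rack.intake_f[pos] > max_intake_f]


def front_to_back_delta(rack: RackReadings) -> dict[str, float]:
    """Exhaust minus intake temperature at each height, in degrees F."""
    return {pos: rack.exhaust_f[pos] - rack.intake_f[pos] for pos in POSITIONS}


# Hypothetical sample readings, for illustration only.
rack = RackReadings(
    intake_f={"top": 82.0, "middle": 75.0, "bottom": 70.0},
    exhaust_f={"top": 100.0, "middle": 95.0, "bottom": 90.0},
)
print(hot_intakes(rack))
print(front_to_back_delta(rack))
```

In this made-up example only the top of the rack is flagged, which matches the point that the highest-mounted equipment sits farthest from the floor tiles.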

In higher-density racks, the more measurement points, the better. Ideally, there would be multiple sensors for each server, so as to detect a minor failure (for instance, a broken server fan) before it can affect temperatures in nearby racks. This is especially true where equipment runs warm to save energy on cooling. The efficiency gains must be counterbalanced by precise and reliable real-time temperature data to help keep temperatures optimized.
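One simple way to spot a localized problem such as a failed fan is to compare each server's reading against the rest of its rack. The sketch below flags any sensor that runs well above the rack median. The sensor names, the readings and the 9-degree margin are assumptions for illustration, not recommended values.

```python
from statistics import median


def find_outliers(readings_f: dict[str, float], max_above_median_f: float = 9.0) -> list[str]:
    """Names of sensors reading more than max_above_median_f above the rack median."""
    baseline = median(readings_f.values())
    return sorted(
        name for name, temp in readings_f.items()
        if temp - baseline > max_above_median_f
    )


# Hypothetical exhaust readings for four servers in one rack.
readings = {"srv-01": 95.0, "srv-02": 96.0, "srv-03": 110.0, "srv-04": 94.0}
print(find_outliers(readings))
```

Using the median rather than the mean keeps a single overheating server from dragging the baseline up and hiding itself.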

Keep airflow top of mind

The ability to track temperature fluctuations is arguably the most important part of solving the problem of hotspots. If you can identify recurring warm pockets of air, you can take steps to address them. That raises the question: What exactly are those steps?

The answer will depend to an extent on the cause of the hotspot, but the majority of these warm pockets are the result of airflow problems:

- Racks placed too far from the CRAC.

- Bypass airflow, or cool air that passes around IT equipment.

- Recirculation air, which results from IT load drawing in cool air after it has mixed with warm air.

Hotspots induced through any of the above situations will not be adequately addressed by dialing up data center cooling capacity. They require a lighter touch, and specifically an active airflow and containment strategy.

This entails the use of containment chambers installed over racks to help maintain neutral pressure (a zero pressure differential), so that cool air is continually drawn in while hot air is expelled into return plenums. Active containment relies on pressure sensors that tell fans when to increase their speed in response to waning airflow. As a result, racks that are positioned farther away from a cool-air source can maintain the pressure needed to keep cool air flowing through them. In this way, bypass airflow is also mitigated, and in turn, air recirculation is reduced as well.
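The feedback loop described above can be sketched as a simple proportional controller: the further the measured pressure falls below the zero target, the more the fan speed rises. This is an illustrative sketch only. The sign convention (negative readings mean waning airflow), the gain and the RPM limits are all assumptions, and a real containment system would use whatever control logic its vendor provides.

```python
def next_fan_rpm(
    current_rpm: float,
    pressure_pa: float,
    target_pa: float = 0.0,
    gain_rpm_per_pa: float = 50.0,  # hypothetical tuning value
    min_rpm: float = 600.0,         # hypothetical fan limits
    max_rpm: float = 3000.0,
) -> float:
    """Return the next fan speed given a differential-pressure reading in pascals."""
    # Positive error means pressure is below target, so the fans should speed up.
    error_pa = target_pa - pressure_pa
    proposed_rpm = current_rpm + gain_rpm_per_pa * error_pa
    # Clamp to the range the fan can actually run at.
    return min(max_rpm, max(min_rpm, proposed_rpm))


print(next_fan_rpm(1500.0, -2.0))   # pressure slightly below target: speed up
print(next_fan_rpm(1500.0, 0.0))    # on target: hold speed
print(next_fan_rpm(1500.0, 30.0))   # well above target: slow to the minimum
```

Clamping the result keeps a noisy or faulty sensor reading from commanding a speed the fan cannot deliver.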

The bottom line is that data center temperature must be managed. If the plan is to operate at the higher end of ASHRAE's recommendations, that's fine, but it also means that the level of temperature and airflow management involved is slightly more intensive.

In other words, it's OK if heat is in the forecast for your data center, so long as you're in control of the situation. That means catching hotspots early and dealing with them swiftly.